21. Components » Packet Filter

The functionality of Packet Filter is described in depth in the Choosing a Method of DDoS Mitigation chapter.

To add a Packet Filter, click the [+] button found in the title bar of the Configuration » Components panel. To configure an existing Packet Filter, go to Configuration » Components and click its name.

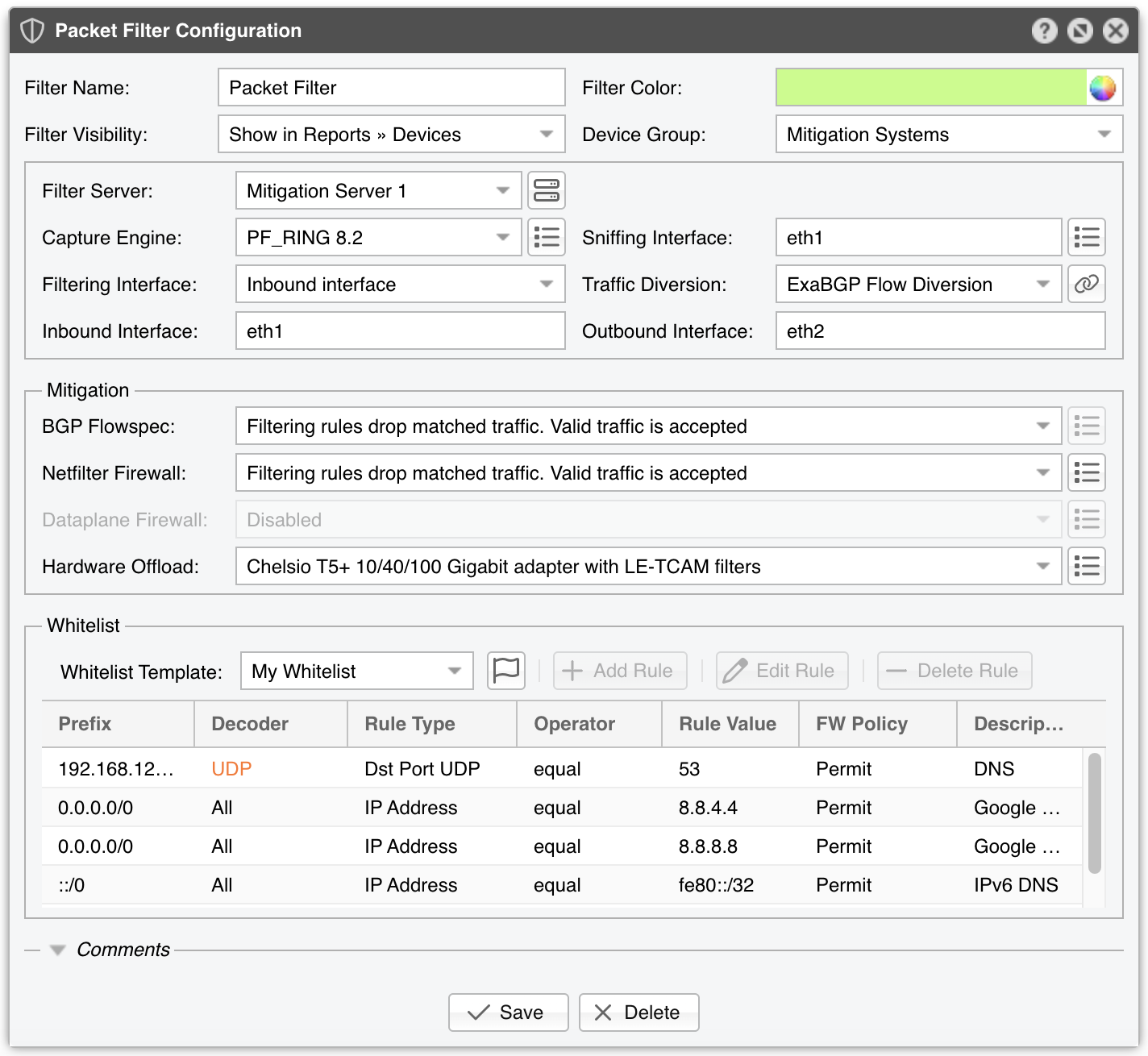

Packet Filter Configuration parameters:

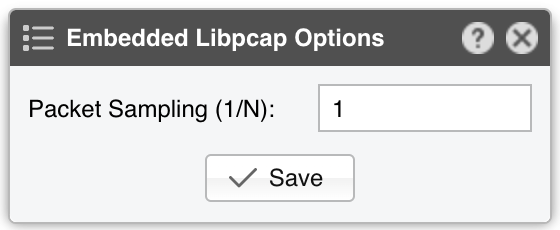

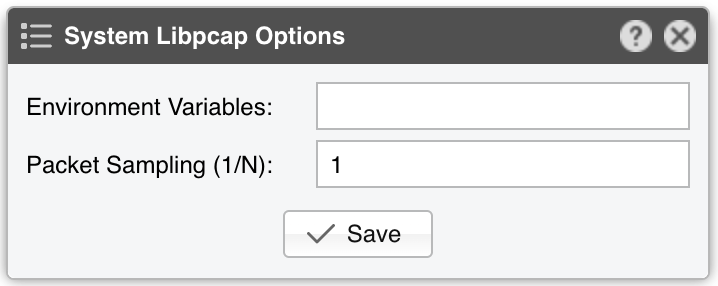

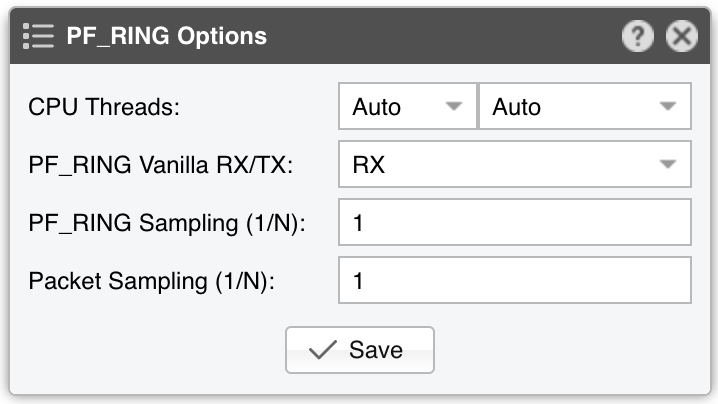

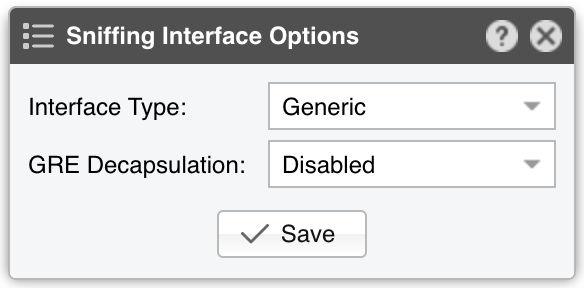

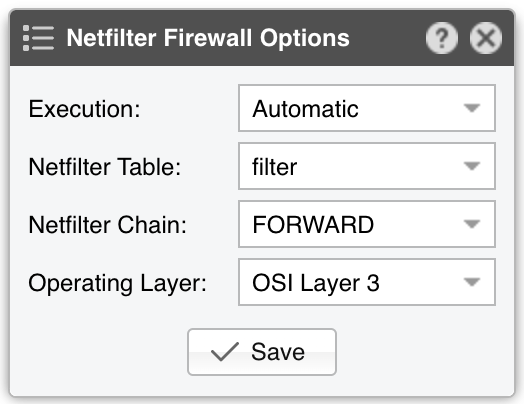

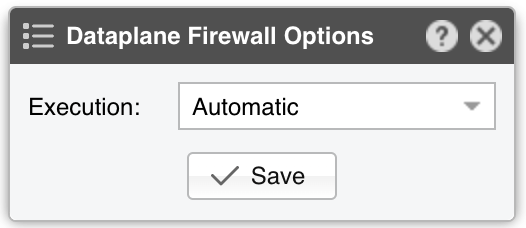

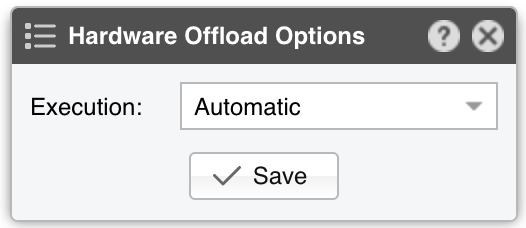

● Filter Name – A short name to help you identify the Packet Filter
● Filter Color – The color used in graphs for the Packet Filter. The default color is a random one. You can change it from the drop-down menu
● Filter Visibility – Toggles the listing inside the Reports » Devices panel
● Device Group – Enter a description if you wish to organize components (e.g. by location, characteristics) or to permit fine-grained access for roles
● Filter Server – Select a server that fulfills the minimum system requirements for running the Packet Filter. If this is not the Console server, follow the NFS configuration steps to make the raw packet data visible in the web interface
● Capture Engine – Select the preferred packet capturing engine:
  ▪ Embedded Libpcap – Select to use the built-in libpcap library. Libpcap requires no additional setup, but it cannot run on multiple threads, and it may be too slow for multi-gigabit sniffing
    • Packet Sampling (1/N) – Must contain the packet sampling rate. On most systems, the correct value is 1
  ▪ System Libpcap – Select to use the libpcap library provided by the Linux distribution
    • Environment Variables – Some NIC drivers provide their own libpcap but require an environment variable to be set
    • Packet Sampling (1/N) – Must be equal to the number of filtering servers activated when the Packet Filter is used in a clustered architecture where each filtering server receives traffic from a round-robin packet scheduler. The correct value in most cases is 1
  ▪ PF_RING – Select to use the PF_RING framework to speed up packet processing. PF_RING is much faster than Libpcap, but it requires the installation of additional kernel modules and supports only a limited number of NICs. PF_RING ZC is not supported by Packet Filter because ZC isolates the sniffed interface from the kernel, so the kernel won’t be able to perform packet switching, routing or filtering. Click the button on the right for PF_RING-specific options:
    • CPU Threads – Packet Filter can run multi-threaded on a given set of CPU cores. Each thread increases the RAM usage. On most systems, activating more than 6 CPU threads hurts performance. The number of threads used by each Filter instance also depends on the number of RSS queues
    • PF_RING Vanilla RX/TX – PF_RING can consider only those packets matching the specified direction
    • PF_RING Sampling (1/N) – PF_RING can sample packets directly in the kernel, which is much more efficient than doing it in user-space
    • Packet Sampling (1/N) – Must be equal to the number of filtering servers activated when the Packet Filter is used in a clustered architecture where each filtering server receives traffic from a round-robin packet scheduler. The correct value in most cases is 1
  ▪ DPDK – Select to use the DPDK framework, then click the button on the right of the Capture Engine field to configure DPDK-specific parameters as described in Appendix 1
  ▪ DPDK [Packet Sensor] – Select to use a DPDK-enabled Packet Sensor as a packet capturing engine. DPDK offers NIC access exclusively to a single process, so this option embeds the Packet Filter’s logic into a Packet Sensor in order to have both Wanguard components listening to the same interface
● Sniffing Interface – The network interface(s) listened to by the Packet Filter. Libpcap accepts only a single interface. PF_RING allows listening to multiple physical interfaces simultaneously when the interfaces are separated by a comma (“,”). Listening to the Inbound Interface increases CPU usage because even the traffic that doesn’t get forwarded will be inspected. Listening to the Outbound Interface decreases CPU usage because only the forwarded traffic reaches that interface; in this case, Packet Filter may still be able to obtain traffic statistics about the dropped traffic from the NIC’s counters
    • Interface Type – Set accordingly when the Sniffing Interface is a TUN/TAP interface or a GRE interface
    • GRE Decapsulation – When enabled, Packet Filter will analyze the encapsulated packet, not the GRE packet itself
● Filtering Interface – Select on which interface to apply the filtering rules:
  ▪ None – Packet Filter detects and reports filtering rules, but it doesn’t apply them to a firewalled interface
  ▪ Inbound interface – Packet Filter applies the filtering rules on the inbound interface, which is defined below
  ▪ Outbound interface – Packet Filter applies the filtering rules on the outbound interface, which is defined below
● Traffic Diversion – Provides a selection of BGP Connectors that can be used for traffic diversion. If the server is deployed inline, or if you do not plan to deploy the server in a Side Filtering topology, leave this parameter set to Off
● Inbound Interface – Enter the interface that receives the incoming/ingress traffic. This parameter can be omitted if the Filtering Interface is the same as the Outbound Interface. Bridged interfaces should have the string “physdev:” prepended to the interface’s name
● Outbound Interface – The cleaned traffic is sent to the downstream router/switch via the outbound interface, which should hold the route to the default gateway. This parameter can be omitted if the Filtering Interface is the same as the Inbound Interface. Bridged interfaces should have the string “physdev:” prepended to the interface’s name
● BGP Flowspec – Select which policy to apply when the Response is configured to send BGP Flowspec announcements for filtering rules. The rate-limit policy works only for bits/s anomalies; anomalies detected for pkts/s will have the traffic matched by the filtering rule fully discarded
● Netfilter Firewall – Packet Filter can leverage the Netfilter framework included in the Linux kernel to perform software-based packet filtering and packet rate limiting. Netfilter is very flexible, and because Packet Filter does not make any use of the connection tracking mechanism specific to stateful firewalls, it is also very fast
  ▪ Disabled – Packet Filter detects and reports filtering rules, but the Netfilter firewall API is not used
  ▪ Filtering rules drop matched traffic. Valid traffic is accepted – Packet Filter detects, reports, and applies filtering rules using the Netfilter firewall. If the filtering rule is not whitelisted, then the traffic matched by it is blocked, and the remaining traffic is allowed to pass
  ▪ Filtering rules drop matched traffic. Valid traffic is rate-limited – Packet Filter detects, reports, and applies filtering rules, and rate-limits the remaining traffic. If the filtering rule is not whitelisted, the traffic matched by it is blocked. The traffic that exceeds the packets/second threshold value is not allowed to pass. Netfilter supports rate-limiting only for packets/s thresholds, not for bits/s thresholds. Note that some kernel versions will fail to rate-limit traffic above 10000 pkts/s and will block all traffic instead
  ▪ Filtering rules rate-limit matched traffic. Valid traffic is accepted – Packet Filter detects and reports filtering rules and rate-limits matched traffic to the threshold value. Netfilter supports rate-limiting only for packets/s thresholds, not for bits/s thresholds. Note that some kernel versions will fail to rate-limit traffic above 10000 pkts/s and will block all traffic instead
  ▪ Apply the default Netfilter chain policy – Packet Filter detects and reports filtering rules and applies them to the firewall using the default Netfilter chain policy. The Netfilter framework is still being used, but all rules have the RETURN target. This option is used exclusively for testing purposes
  When using the Netfilter Firewall, the following options become available:
    • Execution – Filtering rules can be applied automatically without end-user intervention, or manually by a user that clicks the Netfilter icon in Reports » Tools » Anomalies
    • Netfilter Table – The raw option requires both Inbound and Outbound interfaces to be set, and it may not work for virtual interfaces. It provides better packet filtering performance than the filter option
    • Netfilter Chain – Set to FORWARD if the server forwards traffic, or INPUT if it does not
    • Operating Layer – Set to OSI Layer 2 if the server is configured as a bridge, or OSI Layer 3 otherwise
● Dataplane Firewall – This parameter sets the filtering policy of the Dataplane Firewall, a built-in software-based firewall that uses DPDK. It performs better than Netfilter but is less flexible and harder to configure
    • Execution – Filtering rules can be applied automatically without end-user intervention, or manually by a user that clicks the Dataplane icon in Reports » Tools » Anomalies
● Hardware Offload – Select the appropriate option if you have a NIC that provides hardware filters. Since hardware filters do not consume CPU cycles, use this feature to complement the Netfilter Firewall and Dataplane Firewall
  ▪ Disabled – Hardware filters are not applied
  ▪ DPDK Flow API – Packet Filter uses the Flow API provided by the DPDK framework to offload the filtering of packets to the hardware
  ▪ Chelsio T5+ 10/40/100 Gigabit adapter with LE-TCAM filters – Packet Filter uses the cxgbtool utility to apply up to 487 filtering rules that may contain any combination of source/destination IPv4/IPv6 addresses, source/destination UDP/TCP port, and IP protocol. This utility is installed by the Chelsio Unified Wire driver. Drop traffic counters are available for packets, not for bytes
  ▪ Mellanox ConnectX NIC with OFED driver – Packet Filter uses the /opt/mellanox/ethtool/sbin/ethtool utility to apply up to 924 filtering rules that may contain any combination of source/destination IPv4/IPv6 addresses, source/destination UDP/TCP port, and IP protocol. The ethtool utility is installed in the specified path by the OFED driver from Mellanox. Drop traffic counters are not available
  ▪ Intel x520+ 1/10/40 Gigabit adapter configured to block IPv4 sources – Packet Filter programs the Intel chipset to drop IPv4 addresses from the filtering rules that contain source IPs. Up to 4086 hardware filters can be used. Drop traffic counters are not available
  ▪ Intel x520+ 1/10/40 Gigabit adapter configured to block IPv4 destinations – Packet Filter programs the Intel chipset to drop IPv4 addresses from the filtering rules that contain destination IPs. Up to 4086 hardware filters can be used. Drop traffic counters are not available
  When using Hardware Offload, the following option becomes available:
    • Execution – Filtering rules can be applied automatically without end-user intervention, or manually by a user that clicks the NIC chipset icon in Reports » Tools » Anomalies
● Whitelist – Contains a collection of rules created to prevent the blocking of critical traffic. See the dedicated Whitelist Template chapter for details
● Comments – Comments about the Packet Filter can be saved here. These observations are not visible elsewhere
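
The “physdev:” rule for the Inbound Interface and Outbound Interface fields can be checked from the shell before filling in the form. The sketch below is not part of Wanguard. It assumes that a bridge port can be recognized by the presence of /sys/class/net/<interface>/brport, which the Linux bridge driver creates for enslaved interfaces. The function name and its optional second argument are hypothetical and exist only to keep the example testable.

```shell
# Print the value to enter in the Inbound/Outbound Interface field.
# Assumption: an interface is a bridge port when
# <sysfs_root>/<iface>/brport exists (Linux bridge driver behavior).
# The optional second argument overrides the sysfs root for testing.
filter_iface_name() {
    iface="$1"
    sysfs_root="${2:-/sys/class/net}"
    if [ -e "$sysfs_root/$iface/brport" ]; then
        # Bridged interface: prepend the "physdev:" string
        printf 'physdev:%s\n' "$iface"
    else
        printf '%s\n' "$iface"
    fi
}
```

For example, running `filter_iface_name eth1` on the filtering server prints either `eth1` or `physdev:eth1`, depending on whether eth1 is currently enslaved to a bridge.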

Enable the Packet Filter by clicking the small on/off button displayed next to its name in the Configuration » Components panel. When a traffic anomaly triggers the Response action “Detect filtering rules and mitigate the attack with Wanguard Filter”, a Packet Filter instance will be launched automatically. If no traffic anomaly requires the running of a Filter instance, Reports » Devices » Overview will show the message “No active instance”.

Note

The firewalls supported by Packet Filter can be tested in Reports » Tools » Firewall by clicking the [Add Firewall Rule] button.

21.1. Packet Filter Troubleshooting

[root@localhost ~]# sysctl -w net.bridge.bridge-nf-call-ip6tables=1
[root@localhost ~]# sysctl -w net.bridge.bridge-nf-call-iptables=1
[root@localhost ~]# sysctl -w net.bridge.bridge-nf-filter-vlan-tagged=1
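
To confirm that the bridge settings above are actually in effect, the current values can be read back. This is a minimal sketch and not a Wanguard tool. It assumes the keys are exposed as files under /proc/sys/net/bridge, which on recent kernels is only the case once the br_netfilter module is loaded. The function name and the optional root argument are hypothetical.

```shell
# Report which of the three bridge sysctls are not set to 1.
# Assumption: sysctl keys map to files under /proc/sys/net/bridge.
# The optional argument overrides the /proc/sys root for testing.
check_bridge_sysctls() {
    proc_root="${1:-/proc/sys}"
    status=0
    for key in bridge-nf-call-ip6tables bridge-nf-call-iptables bridge-nf-filter-vlan-tagged; do
        # An unreadable or absent file leaves $value empty
        value="$(cat "$proc_root/net/bridge/$key" 2>/dev/null)"
        if [ "$value" != "1" ]; then
            echo "net.bridge.$key is '${value:-missing}', expected 1"
            status=1
        fi
    done
    return "$status"
}
```

Calling `check_bridge_sysctls` without arguments prints one line per setting that differs from 1 and returns a non-zero status if any were found.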

[root@localhost ~]# sysctl -w net.ipv4.ip_forward=1
[root@localhost ~]# sysctl -w net.ipv4.conf.all.forwarding=1
[root@localhost ~]# sysctl -w net.ipv4.conf.default.rp_filter=0
[root@localhost ~]# sysctl -w net.ipv4.conf.all.rp_filter=0
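
Values set with sysctl -w are lost on reboot. One common way to keep them is a drop-in file under /etc/sysctl.d. The sketch below only prints the fragment for the forwarding settings above. The function name is hypothetical, and so is the file name in the usage note.

```shell
# Print a sysctl.d-style fragment with the forwarding settings.
# Nothing is written to disk; redirect the output where needed.
render_forwarding_sysctls() {
    cat <<'EOF'
net.ipv4.ip_forward = 1
net.ipv4.conf.all.forwarding = 1
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.all.rp_filter = 0
EOF
}
```

As root, the output could be saved with `render_forwarding_sysctls > /etc/sysctl.d/90-packet-filter.conf` and loaded with `sysctl --system`. Check the fragment against your distribution's defaults before applying it.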

[root@localhost ~]# iptables -L -n -v && iptables -L -n -v -t raw

[root@localhost ~]# for chain in `iptables -L -t raw | grep wanguard | awk '{ print $2 }'`; do iptables -X $chain; done
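
Deleting a user-defined chain fails while the chain still contains rules or is referenced from another chain. A more defensive variant of the loop above is sketched here as a dry run: it reads the output of `iptables -t raw -S` on standard input and prints the commands it would run, without executing them. It assumes, as the grep above does, that the relevant chains contain “wanguard” in their names and that they live in the raw table. The function name and the chain name in the sample are hypothetical.

```shell
# Read "iptables -t raw -S" output on stdin and print cleanup commands:
# first delete rules that jump to a wanguard chain, then flush and
# delete each wanguard chain. Nothing is executed.
wanguard_raw_cleanup_cmds() {
    awk '
        $1 == "-N" && $2 ~ /wanguard/ { chains[++n] = $2 }
        $1 == "-A" { rules[++m] = $0 }
        END {
            for (i = 1; i <= m; i++)
                for (j = 1; j <= n; j++)
                    if (rules[i] ~ ("-j " chains[j] "($| )")) {
                        rule = rules[i]
                        sub(/^-A/, "-D", rule)   # replay the rule as a delete
                        print "iptables -t raw " rule
                        break
                    }
            for (j = 1; j <= n; j++) {
                print "iptables -t raw -F " chains[j]
                print "iptables -t raw -X " chains[j]
            }
        }'
}
```

Review the output of `iptables -t raw -S | wanguard_raw_cleanup_cmds` first. Only pipe it to `sh` once the listed commands match what you intend to remove.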

[root@localhost ~]# ethtool --show-ntuple <filtering_interface>

[root@localhost ~]# ethtool --show-nfc <filtering_interface>
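
The number of hardware filters currently programmed can be compared with the limit of 4086 given for Intel adapters in the Hardware Offload description. The sketch below assumes that the ethtool output contains a summary line of the form “Total N rules”. Verify this against the output of your ethtool version, since the format is not specified in this guide. The function name is hypothetical.

```shell
# Extract the rule count from "ethtool --show-nfc <iface>" output on stdin.
# Assumption: the output has a line "Total <N> rules".
# Returns non-zero if no such line is found.
ntuple_rule_count() {
    awk '$1 == "Total" && $3 == "rules" { print $2; found = 1 }
         END { if (!found) exit 1 }'
}
```

A possible use is `ethtool --show-nfc <filtering_interface> | ntuple_rule_count`, then comparing the printed number with the documented maximum for the adapter.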

If the ixgbe driver reports "Location out of range" errors, load it with the right parameters in order to activate the maximum number of 8k filtering rules.

[root@localhost ~]# cxgbtool <filtering_interface> filter show

The message "License key not compatible with the existing server" indicates that the server is unregistered. Send the string from Configuration » Servers » [Server] » Hardware Key to sales@andrisoft.com.